Differentiated project

PARTICIPATION DATA

RECORD-KEEPING

For each lesson where new content or skills were presented, participation was tracked and scored from 0 to 3 for each student. Originally, the 3-point scale was designed to reflect student activity at the beginning, middle and end of class, when I was issuing pre-quizzes at the start of class, inquiry activities during class and exit tickets at the end of class. Students earned one point for completing each activity, so their earned score reflected their degree of engagement each day. Asynchronous students completed and submitted the webform-based inquiry lessons to similarly earn up to 3 points per lesson. The synchronous and asynchronous scores earned in this category did not reflect an evaluation of students' performance; rather, they measured demonstrated effort.
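The scoring rule described above can be sketched in a few lines of Python. This is only an illustration of the one-point-per-activity idea, not the gradebook that was actually used; the names `LessonRecord`, `lesson_score` and `participation_percent` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LessonRecord:
    """One student's activity for one lesson (hypothetical structure)."""
    pre_quiz_done: bool
    inquiry_done: bool
    exit_ticket_done: bool


def lesson_score(record: LessonRecord) -> int:
    """Return 0 to 3: one point per completed activity, regardless of correctness."""
    return sum([record.pre_quiz_done, record.inquiry_done, record.exit_ticket_done])


def participation_percent(records: list[LessonRecord]) -> float:
    """Return earned points as a percentage of the points available."""
    if not records:
        return 0.0
    earned = sum(lesson_score(r) for r in records)
    return 100.0 * earned / (3 * len(records))
```

A student who completed the pre-quiz and inquiry activity but skipped the exit ticket would earn 2 of 3 points for that lesson. The score counts completion only, which matches the intent of measuring effort rather than performance.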

Detailed, accurate record-keeping was essential to ensure students received the credit they earned. The pre-quizzes I administered were based in the CK-12 system, which automatically keeps a record of student attempts and scores. The inquiry activities students completed on their whiteboard slides were saved as .pdf files for future reference. The exit tickets I issued were collected with BookWidgets, again because it allowed me to track student participation. When students contested an engagement score for a specific day, I was able to refer not only to the handwritten notes I took during class but also to the work they completed. Where they did not complete the work, or completed only portions of it, I was able to produce empty slides with their names on them and images showing no attempt on the pre-quizzes and/or exit tickets. Additionally, this systematic approach to note-taking and documentation, together with the increased one-on-one time I had with students during class, allowed me to determine quickly and easily which students were present-not-participating (PNP). In large part, the students I identified in September as exhibiting those behaviors did not change their behavior at any point throughout the school year.

At the end of Quarter 1, the participation score comprised approximately 24% of the recorded progress grade. At the end of Semester 1, the participation score comprised approximately 15% of the recorded course grade. The disparity is due to the fact that graded, common assessments were assigned more frequently during Quarter 2 than during Quarter 1.
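The arithmetic behind that shift can be sketched as follows, assuming a points-based gradebook in which the participation share is simply participation points divided by all points available. The function name and every point total below are hypothetical, chosen only to reproduce the approximate percentages; they are not the actual gradebook values.

```python
def participation_share(participation_points: float, assessment_points: float) -> float:
    """Return participation as a percentage of all available points."""
    total = participation_points + assessment_points
    if total <= 0:
        raise ValueError("total available points must be positive")
    return 100.0 * participation_points / total


# Hypothetical Quarter 1 totals: fewer graded assessments.
q1_share = participation_share(participation_points=72, assessment_points=228)

# Hypothetical Semester 1 totals: participation points double, but
# assessment points grow faster because more assessments were assigned.
s1_share = participation_share(participation_points=144, assessment_points=816)

print(f"Quarter 1: {q1_share:.0f}%  Semester 1: {s1_share:.0f}%")
```

With these made-up totals, participation keeps accruing at the same rate per lesson, yet its share of the grade falls because the assessment points in the denominator grow more quickly.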

It was my hope that these scores would encourage students who show consistent effort in class but who may not always earn high scores on standardized assessments. Yet, I wasn't seeking to make this portion of the grade so high that it would create an inaccurate final grade for those students who did show mastery on standardized assessments but who routinely did not participate in classwork. I believe this amount, 15%, satisfied both those criteria. For this reason, it is a system I'd like to continue in the future.

PARTICIPATION PERFORMANCE

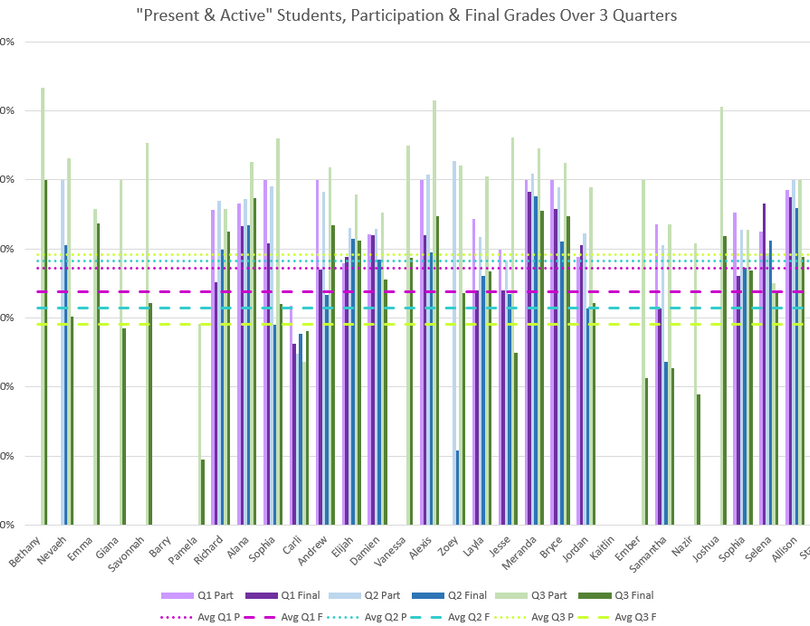

When the participation subtotal score is plotted against the recorded quarter grade for each student, the data support what educators know to be true: when students engage in the learning process, they can more easily achieve mastery.

For students with high final Quarter 1 scores (>80%), participation scores nearly matched their earned final scores. This quarter showed the highest engagement of the year; nearly half of the class list earned high (>75%) participation scores. Even students earning average (60%-75%) final quarter scores demonstrated high classwork participation scores. Only one student was able to earn a passing final quarter score without earning a passing participation score.

Similar Quarter 2 data reveals less consistency in participation. Many failing students earned high participation scores. I can attest that these students weren't just demonstrating effort during class time; they showed mastery of concepts and skills in their classwork. However, they just never completed required, standardized, common assessments in the LMS.

More generally, I observed high participation scores relative to the earned final scores. This was expected and is explained by the high-level, relational concepts and skills introduced during the second quarter. Here, every student with an earned final score greater than the participation score is either asynchronous or routinely present-not-participating (PNP).

The disparity observed between classwork participation and earned final scores is even greater in Quarter 3. With the exception of five students, participation scores remained high. In fact, average participation scores increased as the school year progressed! Yet the earned final progress scores for this quarter are among the lowest of the year. Again, a closer look at individual gradebooks reveals that this is not in any way a reflection of learning but, rather, a reflection of students' failure to submit required, standardized, common assessments in the LMS [despite multiple reminders to do so within the time provided].

The fine dotted lines in each of the following three graphs demonstrate the participation among various groups within the classlist over three quarters. Within the "Present & Active" group of students, the average participation was between 70% and 80%, increasing slightly from Quarter 1 through Quarter 3. Participation was comparable for the "PNP" (present-not-participating) students during Quarter 1, but I observed a dramatic decline in Quarter 2 followed by virtually no change in Quarter 3. The asynchronous group of students who earned participation credit by completing classwork independently and submitting through the LMS showed the least consistency. For Quarter 1, participation was nearly equal to the "Present & Active" group of students. It increased in Quarter 2 and decreased significantly in Quarter 3.

STUDENT SURVEY DATA

At the start of this school year, I adopted several technology tools to facilitate lesson planning and delivery. I also began to practice teaching strategies that were entirely new to me and that felt unsettling as I practiced them.

At the end of each quarter, I collected anonymous survey data to gauge my students’ thoughts and feelings about the course content, lesson delivery and technology tools I introduced. Each quarter, I provided a different survey style.

The following graphs represent raw survey data from 22 out of a possible 45 students during November 2019.

My Quarter 2 survey was a bit different. Instead of asking focused questions with multiple-choice responses, I hoped for more personal and authentic descriptions from my students regarding their feelings about the course content, how live session time was being used and their perception of me as a teacher. With open-ended answers limited to single words or short phrases, I assembled word clouds with the hope that they would show many students feeling similarly about their experience.

The following word clouds represent the responses of 19 students at the start of February 2020.

The final survey I issued includes the same questions I ask students at the end of every academic year. This year, due to changes in my lesson planning and execution, I was particularly interested in the responses pertaining to the degree of challenge, their perceived preparedness for assessments, their perceived ability to take ownership of their learning and their perception of the classroom atmosphere.

Only 16 students responded to the survey within the one week I provided them to complete it. Though the survey was anonymous like all the others, I expect only the most "Present & Active" students participated.

This feedback, along with that collected in each of the earlier surveys, is encouraging and provides further support for my continued use of a student-centered, inquiry-based classroom approach for chemistry at Agora.

UNSOLICITED STUDENT FEEDBACK